– THE PROFESSIONAL WAY TO LEARN PYSPARK FOR WORK –

Apache Spark Certification Training

A premium PySpark course to prepare you for becoming a Databricks Certified Associate Developer

What Learners Say

What's included?

“Let's get you certified!”

Meet Florian Roscheck

“I can't believe I don't know anything about big data processing!” – this is what set me off on an exciting journey to learn Apache Spark. Today, I have taught more than 5,000 students how to pass the Databricks Spark certification with my popular practice exams.

As a Sr. Data Scientist at a major consumer goods company in Germany, I currently apply big data models with my data team in a business context. Sustainability is an important topic for me, and not only since I worked as a data scientist at a renewable energy company in California.

I love that Apache Spark is open source, and I volunteer at NumFOCUS to promote open practices in research, data, and scientific computing.

Course Content

Take your Apache Spark skills to the next level!

Apache Spark Certification Training

Ready to boost your profile as a data professional with verified, job-ready Apache Spark skills?

“Is it worth it?”

...a question you or your employer might ask.

FAQ

What format is the course in?

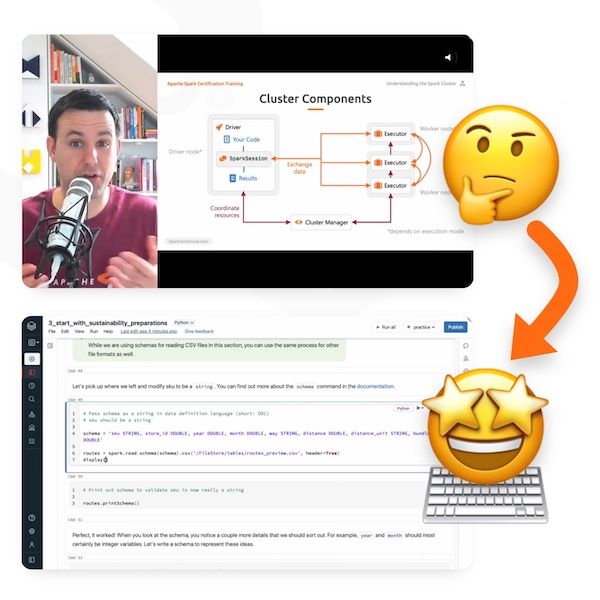

The course consists of videos, interactive coding exercises, and practice questions, including practice exams. This content is distributed over 18 modules that alternate between a focus on theory and a focus on practice, which helps keep you interested and engaged.

The course contains 14.5 hours of video content across 96 HD videos. The videos serve different purposes. Some are a guided educational tour of Spark's architecture. Others let you "learn by doing": you code along in your browser as you watch Florian write and explain Apache Spark code. This lets you use and learn Apache Spark in an intuitive, practical way.

Each of the 20 interactive coding exercises in this course consists of an instructional video, an interactive notebook, an evaluation script, and a solution video. In the instructional video, you go through the exercise instructions together with Florian, and he gives you tips on how best to solve the exercise. Then, you solve the exercise on your own in an interactive coding environment in your browser. If the code you submit does not (yet) solve the exercise, the evaluation script provided in the course gives you valuable feedback on what you can improve. Finally, whether or not you figured out the solution, Florian shows you his approach to solving the exercise. All exercises are ungraded, and you can repeat them as many times as you like until you feel ready for the Databricks exam.

At the end of each module, you will find a couple of practice questions that check whether you understood the module's content. If you answer incorrectly, you will get an explanation of why your answer was wrong, so you can learn from your mistakes.

The 3 original practice tests in this course prepare you for the environment and content of the Databricks certification in a targeted way. You will become familiar with the question format of the Databricks certification as well as the breadth of topics it covers. The biggest benefit here is the thorough explanation of every answer across all 180 exam questions. Once you submit an answer, you get immediate feedback on whether it was right and, if it was wrong, what was wrong with it. This targeted feedback gives you a chance to rapidly improve your understanding of PySpark, putting you on a fast track to passing the Databricks certification and advancing your big data career.

Is there a discount for students?

If you are a student interested in picking up solid Apache Spark skills, then, first of all, congratulations on a wise decision! Second, please reach out to florian@headindata.com with proof that you are currently enrolled at an educational institution (e.g., a photo of your valid student ID card), so I can provide you with an individualized coupon.

Is Apache Spark free to use in this course?

Yes, and not only in this course! Apache Spark is distributed under the Apache License 2.0. This license is approved by the Open Source Initiative®, which means that Apache Spark may be freely used, modified, and shared. Apache Spark is also free for commercial use, subject to the conditions outlined in its license. You can read the full license in Apache Spark's repository on GitHub.

Is the Spark certification free?

No. Databricks charges $200 (US dollars) to take the Databricks Certified Associate Developer for Apache Spark certification exam. This fee is charged for every exam attempt. So, if you do not pass the certification exam on your first attempt and want to retake it, you have to pay the $200 again.

The $200 cost to take the Databricks certification is not included in the course price and needs to be paid to Databricks directly upon registration for the certification exam.

What do I need to prepare for the Spark certification in addition to this course?

This course will thoroughly prepare you for the Databricks Spark certification, so you do not need any additional resources. It familiarizes you with all the content of the exam, gives you ample practice opportunities, includes a special section with exam tips & tricks, and shows you how to register for the exam.

If I notice the course is not for me, can I get my money back?

Yes. If, after trying the course, you decide it is not for you, you can get your money back within 30 days of the purchase date. In this case, please email florian@headindata.com with the subject line "Course Refund" and state your user name and the date you purchased the course. You can find more information in the Refund Policy.

Do you offer a certificate of completion?

Yes, upon completion of all lessons, exercises, and practice exams, you will receive a certificate of completion with your name on it.

I get bored by slow online courses and would rather read than watch videos. What can you offer me?

As an avid online learner myself, I know what you mean. Everybody learns at their own pace. I believe the pace of the course videos is a good average that most students are happy with. But you can speed up or slow down video playback in the course player to adjust the pace to your liking.

If you would rather read than watch videos, you have two main options. First, subtitles are available for all videos. For now, subtitles are in the original language of the videos, English. Second, you can do all 6 labs without the videos. The solution notebooks we use in the labs include a lot of explanatory text. So, you could, for example, step through the labs by reading the solution notebooks like "books", with explanatory text and code snippets that help you understand Apache Spark.

Is learning Apache Spark still relevant?

Yes, absolutely. Big data skills are highly rewarded! In the 2022 Stack Overflow Developer Survey, Apache Spark was the top-paying technology in the category "Other Frameworks and Libraries". So, Apache Spark is still relevant if you are chasing a rewarding big data career.

In addition, state-of-the-art big data tools like Delta Lake are built on top of Apache Spark. The framework is even a top choice for integrating large datasets with increasingly complex machine learning pipelines built with Hugging Face and PyTorch. Apache Spark's foundational impact on today's big data industry makes it an extremely relevant skill.

In this article on LinkedIn, I explain in detail why Spark is relevant for big data processing today and in the future.

How easy is it to learn Apache Spark?

With the right guidance, learning Apache Spark is easy. If you are familiar with SQL or Pandas, learning Spark is very intuitive, at least at the beginning. You will pick up the code relatively quickly, and with guided instruction you will figure out the syntax differences sooner rather than later.

Yet, as you progress with Spark, fundamental differences between Spark and other frameworks like Pandas become more apparent. Processing big datasets quickly and at scale is not an easy task. For this reason, it pays off to invest time in understanding how Apache Spark works behind the scenes.

Studying the infrastructure of Apache Spark, for example with the resources in this course, will enable you to identify and pull the right levers to build resilient, frustration-free, and scalable Spark code that reliably processes big data workloads. If you take advantage of clear, step-by-step instruction on Spark's architecture fundamentals, like in this course, even learning the architecture of Apache Spark will not be hard.

Is the Spark certification worth it?

Motivations for taking the Apache Spark certification differ.

A popular reason to take the Spark certification is to improve career opportunities. The Databricks Spark certification quickly shows employers that you know your way around Apache Spark. It also shows that you have the discipline to learn and improve your knowledge – a valuable skill in the rapidly changing world of IT.

Another great reason to take the Spark certification is to set an achievable personal goal for your learning journey of Apache Spark. The popular Databricks Spark certification revolves around a well-rounded curriculum that provides you with the theoretical and practical knowledge you can build big data projects on. It is this broad spectrum, this well-calibrated mix of knowledge, that makes the Spark certification such a good goal for somebody who wants to get to know Spark.

Should I go for the Databricks or the Cloudera Spark certification?

Which Spark certification is best?

There are 2 popular certifications for Apache Spark: The Databricks Certified Associate Developer for Apache Spark certification and the Cloudera CCA Spark and Hadoop Developer Exam (CCA175).

When comparing Cloudera vs. Databricks on Google Trends, you can see that since about February 2020, the search volume for "databricks" has exceeded the search volume for "cloudera". More recently, "databricks" has been searched for far more often than "cloudera", which suggests that Databricks is the better-known company.

Thus, taking the Databricks certification may make it easier for others to recognize your big data skills.

Can I run Apache Spark on my own computer?

In principle, yes. In this article, Matthew Powers describes how you can install PySpark on a Mac. Matthew rightfully says that "Creating a local PySpark [...] setup can be a bit tricky [...]." Luckily, we do not need to create a local setup in this course.

Also, think about this: Spark is specifically a framework for distributed big data processing. If you run Spark on your laptop, which is a single machine rather than a network of distributed nodes, you miss out on experiencing the power of Spark for yourself!

This is why, in this course, we will work with Databricks' free Community Edition. With this approach, you will already get started with running Spark in a realistic, professional environment – just like one you might use at work.

Is this course affiliated with Databricks?

No. This course is not affiliated with or sponsored by Databricks.

What is Apache Spark?

Apache Spark is a powerful open-source framework designed to process and analyze large amounts of data quickly and efficiently. It provides a distributed computing environment, meaning it can divide the workload across multiple computers or servers, enabling faster processing speeds. Apache Spark supports various data processing tasks, like reading and writing data from and to different data sources.

Spark is also great for running data analytics tasks on very large datasets (e.g. from gigabyte to petabyte-scale). It also supports real-time streaming and machine learning, making it a versatile solution for handling diverse data challenges.
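
To make the idea of dividing a workload across multiple computers more concrete, here is a toy, single-machine sketch in plain Python (not PySpark) of the split, process, and combine pattern that distributed engines such as Spark roughly follow. All function names are hypothetical, and a thread pool merely stands in for a cluster's worker nodes; Spark itself adds scheduling, fault tolerance, and data shuffling that this sketch leaves out.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, num_partitions):
    """Split a list into num_partitions contiguous, roughly equal chunks."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    size, remainder = divmod(len(records), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` partitions each take one extra record.
        end = start + size + (1 if i < remainder else 0)
        partitions.append(records[start:end])
        start = end
    return partitions


def count_words(lines):
    """Work done independently on one partition: count words locally."""
    return Counter(word for line in lines for word in line.lower().split())


def merge_counts(partial_counts):
    """Combine the per-partition results into one final result."""
    total = Counter()
    for partial in partial_counts:
        total.update(partial)  # adds counts rather than replacing them
    return total


def parallel_word_count(lines, num_workers=4):
    """Split the data, process partitions concurrently, then merge."""
    partitions = split_into_partitions(lines, num_workers)
    # Hypothetical stand-in: threads play the role of separate cluster machines.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    return merge_counts(partial_counts)


if __name__ == "__main__":
    text = [
        "Spark splits the work",
        "workers process their partitions",
        "Spark merges the results",
    ]
    for word, count in parallel_word_count(text, num_workers=2).most_common(3):
        print(word, count)
```

Because of Python's global interpreter lock, the threads here illustrate the structure of the computation rather than deliver real speedups for CPU-bound work. On a Spark cluster, each partition would instead be processed by a task running on one of the cluster's executors.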

Why is Apache Spark so popular?

Apache Spark is immensely popular for several reasons. Firstly, it is the go-to choice for leading companies like Apple, Netflix, Meta (formerly Facebook), and Uber, which rely on its capabilities to process massive amounts of big data. As the demand for collecting and analyzing large datasets continues to grow, Spark has become crucial in enabling organizations to handle these tasks efficiently.

Spark's versatility is another key factor in its popularity. It supports various modern use cases, such as real-time streaming and machine learning, making it a comprehensive solution for diverse data processing needs.

As a result, Spark education is highly valued. As Spark becomes increasingly prevalent in the industry, acquiring skills and knowledge in Spark can greatly enhance one's career prospects and enable individuals to contribute to the growing field of big data engineering and analytics.

Are Apache Spark and PySpark the same thing?

No, Apache Spark and PySpark are not the same thing, but they are closely related.

Apache Spark is an open-source distributed computing system designed for big data processing and analytics. PySpark is the Python library for Apache Spark. It allows developers to write Spark applications using Python, leveraging the power and capabilities of Spark's distributed computing framework.

For processing big data workloads, Spark goes far beyond typical Python data processing libraries like Pandas. Spark can also be used from programming languages other than Python, such as Scala.

In summary, Apache Spark is the overarching framework, while PySpark is its Python API, which lets Python developers work with Spark.

Disclaimer

Neither this course nor the certification is endorsed by the Apache Software Foundation. "Spark", "Apache Spark", and the Spark logo are trademarks of the Apache Software Foundation.

This course is not sponsored by or affiliated with Databricks.